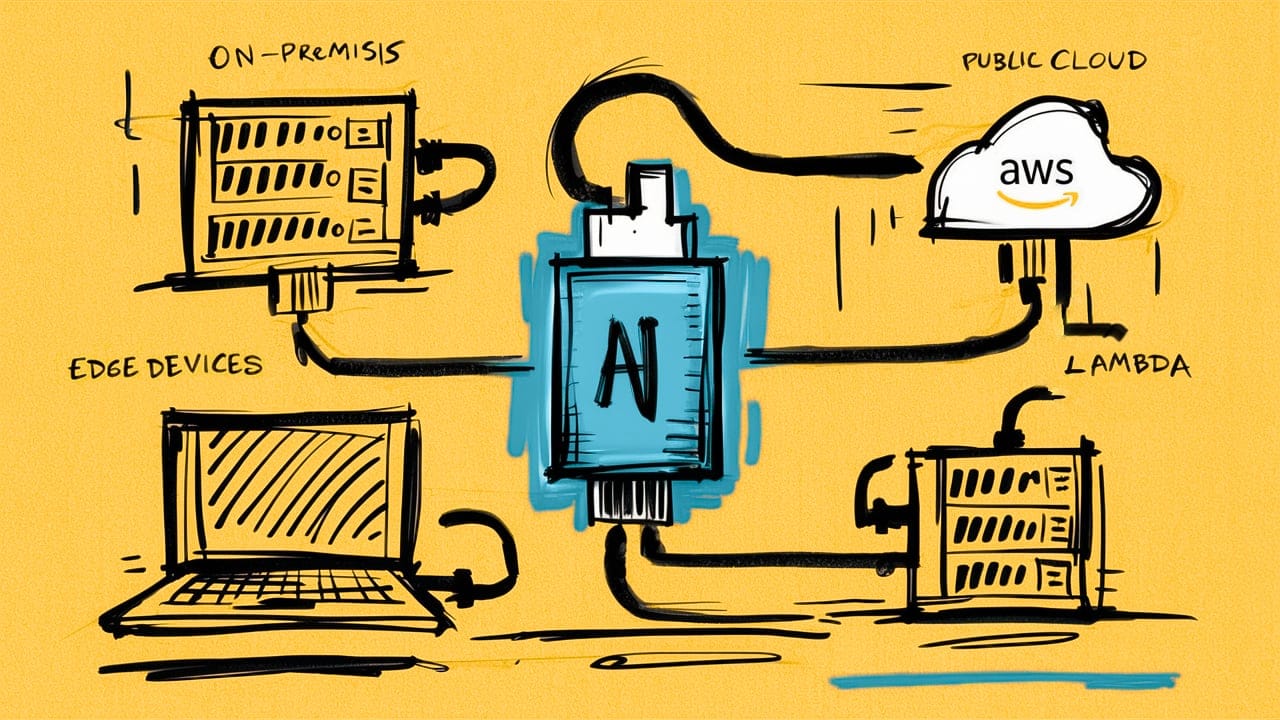

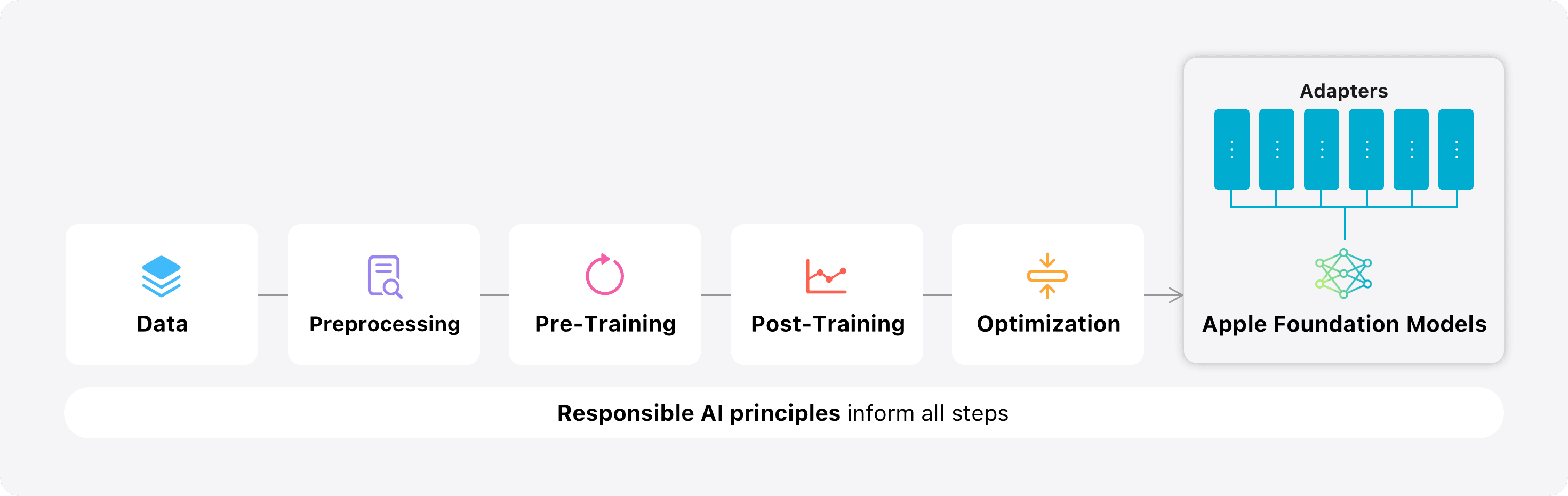

In late June we did a deep dive into Microsoft's and Apple's integrated ecosystems and the overall impact of AI inference moving to the edge. Both platforms will shape how we use AI for lifestyle and business for years to come. At the heart of each are Small Language Models (SLMs), such as Microsoft's Phi series, which leverage on-device hardware to accomplish specific tasks. Nowhere was this SLM approach more pronounced than in Apple's adapter architecture, where small collections of model weights are overlaid onto a common Small Language Model to eke out specialized features such as summarization or image generation.
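To make the adapter idea concrete, here is a minimal sketch, assuming a low-rank (LoRA-style) adapter where each task stores two small matrices that are added on top of a shared base weight. The matrices, the `tone_rewrite` task, and the function names are all hypothetical and chosen only for illustration; this is not Apple's implementation.

```python
# Illustrative only: one shared base weight matrix, plus a small low-rank
# "overlay" per task. All values and names here are hypothetical.

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a, b):
    """Element-wise sum of two same-shaped matrices."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def apply_adapter(base_w, adapter):
    """Return base_w + up @ down, the effective weights for one task."""
    down, up = adapter  # down: (rank x in), up: (out x rank)
    return add(base_w, matmul(up, down))


BASE_W = [[1.0, 0.0], [0.0, 1.0]]  # shared by every task

ADAPTERS = {
    "summarization": ([[0.5, 0.5]], [[1.0], [0.0]]),
    "tone_rewrite": ([[1.0, -1.0]], [[0.0], [0.2]]),
}

summarizer_w = apply_adapter(BASE_W, ADAPTERS["summarization"])
```

The base matrix is never modified, so switching features only means swapping which small adapter is overlaid.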

The SLM Advantage

Small Language Models may initially seem like an optimization technique used by hyperscalers. However, they are poised to become a major component of your organization’s AI strategy. Matthew Berman aptly described this in the context of Apple Intelligence…

They are obviously taking the very verticalized approach to AI. They are not building a generalized world model. They are building, very narrow, specific models, for specific use cases and I actually believe that architecture is going to take us really far... and if I were to bet - I would bet on that architecture - over the generalized world model approach.

Not convinced yet? Take a closer look at Meta's bold strategy with the recent release of the open-source Llama 3.1 series of models. Mark Zuckerberg's approach to AI is nothing short of revolutionary. By open-sourcing the entire Llama architecture, Meta is effectively making advanced AI models ubiquitous and turning them into a commodity. This "scorched earth" tactic isn't just altruism - it's a calculated business move that will reshape the AI landscape.

... I think you're just going to see this wide proliferation of models where people now have the incentive to basically customize, build, and train exactly the right size model for what they're doing... they're going to have the tools to do it because of a lot of new partner integrations...

By democratizing access to powerful AI models, Meta is creating an environment where AI becomes a ubiquitous tool, available to businesses of all sizes. This opens up immense opportunities for companies like Arcee Cloud, who can leverage these open-source models to create niche, fine-tuned AI solutions for specific business needs. As AI models become a commodity, the real value will lie in customization, deployment, and integration - precisely the areas where an agile and specialized company like Arcee can thrive.

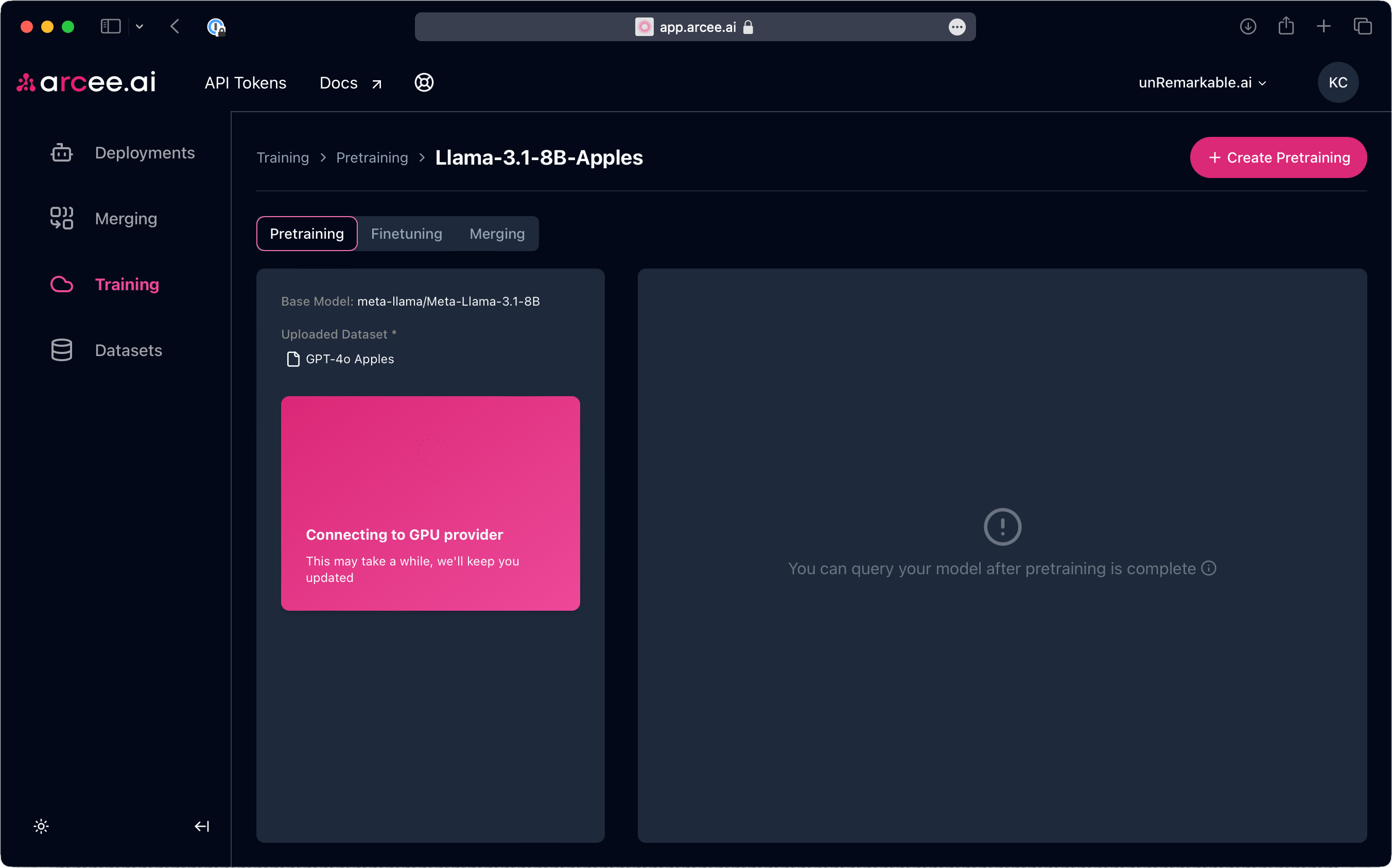

Days after the Llama 3.1 release, Arcee was right at the forefront offering this new model for their customers to fine-tune, pretrain, and deploy.

I created a synthetic dataset using a larger teacher model such as GPT-4o, then fed it into their new continuous pretraining feature to deploy my own customized version of Llama 3.1 8B. Yes, RAG is still a critical architecture, but think about how much better it could be with more embedded knowledge. Consider how much better enterprise SQL agents behind products such as Vanna.AI could be in cases where in-context learning (few-shot examples) falls flat or lacks accuracy. Arcee makes it as easy as connecting an S3 bucket, and you get a newly deployed model on a schedule with updated knowledge.

Let's explore Arcee in detail and what makes them unique.

The Arcee Advantage

Arcee AI stands out with its innovative approach to Small Language Models. Unlike competitors focused on large language models (LLMs), Arcee AI specializes in creating custom, domain-specific SLMs that often outperform their larger counterparts. By leveraging these smaller models, Arcee AI provides high-quality, cost-effective, and energy-efficient AI solutions. This focus on SLMs translates into significant savings on compute and deployment costs and ensures that the models are highly specialized and effective for specific tasks.

Model Merging & Spectrum

Arcee AI has pioneered two major advancements in optimizing LLM training: Model Merging and Spectrum. Model Merging combines the “brains” of two models, enhancing their performance beyond what the individual models could achieve independently. This process mitigates catastrophic forgetting and significantly reduces training costs, allowing for frequent re-training with updated data.
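As a rough illustration of what "combining" two models can mean, here is a minimal sketch of the simplest possible merge: a linear interpolation of two checkpoints that share the same parameter names and shapes. This is an assumption made for illustration, not a description of Arcee's own merging method; the parameter names, values, and the `weight_a` knob are hypothetical, and parameters are flattened to plain lists to keep the example dependency-free.

```python
def linear_merge(model_a, model_b, weight_a=0.5):
    """Interpolate two checkpoints parameter by parameter.

    Each model is a dict mapping a parameter name to a flat list of floats.
    weight_a is the share taken from model_a; the rest comes from model_b.
    """
    if model_a.keys() != model_b.keys():
        raise ValueError("models must share the same parameter names")
    merged = {}
    for name in model_a:
        a, b = model_a[name], model_b[name]
        if len(a) != len(b):
            raise ValueError(f"shape mismatch for {name}")
        merged[name] = [weight_a * x + (1.0 - weight_a) * y for x, y in zip(a, b)]
    return merged


# Hypothetical toy checkpoints: a general model and a domain-tuned one.
general = {"layer0.weight": [1.0, 2.0], "layer0.bias": [0.0]}
domain = {"layer0.weight": [3.0, 4.0], "layer0.bias": [1.0]}

merged = linear_merge(general, domain, weight_a=0.5)
```

Because a merge like this only does arithmetic on existing weights, it needs no gradient updates, which is one reason merging can be so much cheaper than another round of training.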

Spectrum, another innovative technique, targets model training more efficiently by optimizing resource usage. It accelerates training by focusing on modules with high signal-to-noise ratios, effectively matching the performance of full fine-tuning while reducing GPU memory usage. This makes the training process faster and cheaper, aligning with Arcee’s commitment to cost-efficiency and high performance.
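The selection step can be sketched as follows, assuming a signal-to-noise score has already been computed for every module. How Spectrum actually computes that score is not covered here; the module names, the scores, and the `top_fraction` parameter are hypothetical placeholders.

```python
import math


def select_trainable(snr_by_module, top_fraction=0.25):
    """Pick the modules with the highest signal-to-noise scores.

    Returns (trainable, frozen) as two sets of module names. Only the
    trainable set would receive gradient updates during fine-tuning.
    """
    if not 0.0 < top_fraction <= 1.0:
        raise ValueError("top_fraction must be in (0, 1]")
    ranked = sorted(snr_by_module, key=snr_by_module.get, reverse=True)
    keep = max(1, math.ceil(len(ranked) * top_fraction))
    trainable = set(ranked[:keep])
    frozen = set(ranked[keep:])
    return trainable, frozen


# Hypothetical, precomputed scores for four modules.
snr_scores = {
    "layers.0.attn": 2.4,
    "layers.0.mlp": 0.8,
    "layers.1.attn": 0.3,
    "layers.1.mlp": 1.9,
}

trainable, frozen = select_trainable(snr_scores, top_fraction=0.5)
```

Freezing the low-scoring modules is where the memory savings come from: frozen parameters need no gradients or optimizer state.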

Continuous Pretraining Platform

Arcee AI’s platform offers an end-to-end solution for training, merging, and deploying SLMs. The platform supports continuous pretraining, which involves updating models with new knowledge through next token prediction on proprietary data. This approach ensures that the models remain current and highly specialized for their intended tasks. The integration of Spectrum into the continuous pretraining routines further enhances the model’s efficiency and accuracy, making it possible to train models with fewer iterations and less computational power.
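For readers new to the term, next token prediction can be sketched in a few lines: every prefix of a token sequence is paired with the token that follows it, and the model is scored by the average negative log-probability it assigns to the true next token. The `uniform_model` below is a hypothetical stand-in for a real language model, and the token IDs are made up.

```python
import math


def next_token_pairs(token_ids):
    """Pair every prefix with the token that follows it."""
    return [(token_ids[:i], token_ids[i]) for i in range(1, len(token_ids))]


def next_token_loss(token_ids, predict_probs):
    """Average negative log-likelihood of each true next token.

    predict_probs(context) must return a list of probabilities, one per
    vocabulary entry, for the token that follows the given context.
    """
    pairs = next_token_pairs(token_ids)
    if not pairs:
        raise ValueError("need at least two tokens")
    losses = [-math.log(predict_probs(context)[target]) for context, target in pairs]
    return sum(losses) / len(losses)


def uniform_model(context, vocab_size=4):
    """Hypothetical untrained model: every token is equally likely."""
    return [1.0 / vocab_size] * vocab_size


loss = next_token_loss([2, 0, 3, 1], uniform_model)
```

Continuous pretraining keeps minimizing this same kind of loss, just on your proprietary text instead of the original pretraining corpus.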

Real-World Applications

The flexibility and efficiency of Arcee’s SLMs make them suitable for a wide range of real-world applications. These include real-time customer service automation, where the models can handle queries quickly and accurately, and edge computing scenarios, where their compact size and lower computational requirements are critical. Additionally, your custom Arcee models are ideal for organizations looking to implement cost-effective AI solutions, whether for rapid prototyping or on-premises deployment to enhance data privacy. One use case I am excited to see is the customization of SLMs as cheap routers for more expensive models. Using Arcee with popular projects such as RouteLLM would be amazing.
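The router idea can be sketched like this, assuming a small model that returns a difficulty score between 0 and 1 for each prompt. The word-count scorer, the threshold, and the model labels are hypothetical stand-ins, and none of this uses RouteLLM's actual API.

```python
def route(prompt, difficulty_score, threshold=0.5):
    """Send hard prompts to the expensive model and the rest to the cheap one."""
    if difficulty_score(prompt) >= threshold:
        return "large-model"
    return "small-model"


def toy_difficulty_score(prompt):
    """Stand-in for a fine-tuned SLM classifier: longer prompts score as harder."""
    return min(len(prompt.split()) / 20.0, 1.0)


easy = route("What time is it?", toy_difficulty_score)
hard = route(
    "Compare three database sharding strategies and explain the "
    "trade-offs for a multi-region deployment",
    toy_difficulty_score,
)
```

In practice the scorer is the part you would fine-tune, and the threshold becomes the dial for trading cost against answer quality.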

The Future with Arcee AI

Looking ahead, Arcee AI is well positioned to lead the way. By democratizing access to powerful, specialized AI models, Arcee is creating an environment where businesses of all sizes can leverage advanced techniques with only a few mouse clicks. The company’s commitment to using open-source general intelligence and enhancing it through model merging ensures that their models remain cutting-edge and adaptable to your individual challenges.

Recent SLMs

Lastly, if you are new to open-source Small Language Models, here are a few recent announcements. Most of these are already available on the Arcee platform.

- Meta Llama 3.1 8B - A powerful multilingual AI model featuring enhanced reasoning and a 128K context length for advanced applications.

- Google Gemma 2 2B - A compact 2-billion-parameter model designed for efficient deployment across diverse hardware setups, reportedly outperforming larger models like GPT-3.5 in chat evaluations.

- Qwen 2 7B - A competitive language model that excels in various tasks, including language understanding, generation, multilingual capability, coding, mathematics, and reasoning.